Author: TGBarker, November 2025

“It’s astonishing how many daily hacking attempts are made by bots trying to bring down your website,” says Gordon Barker. “TGBSL helps protect your site from being attacked or hacked.”

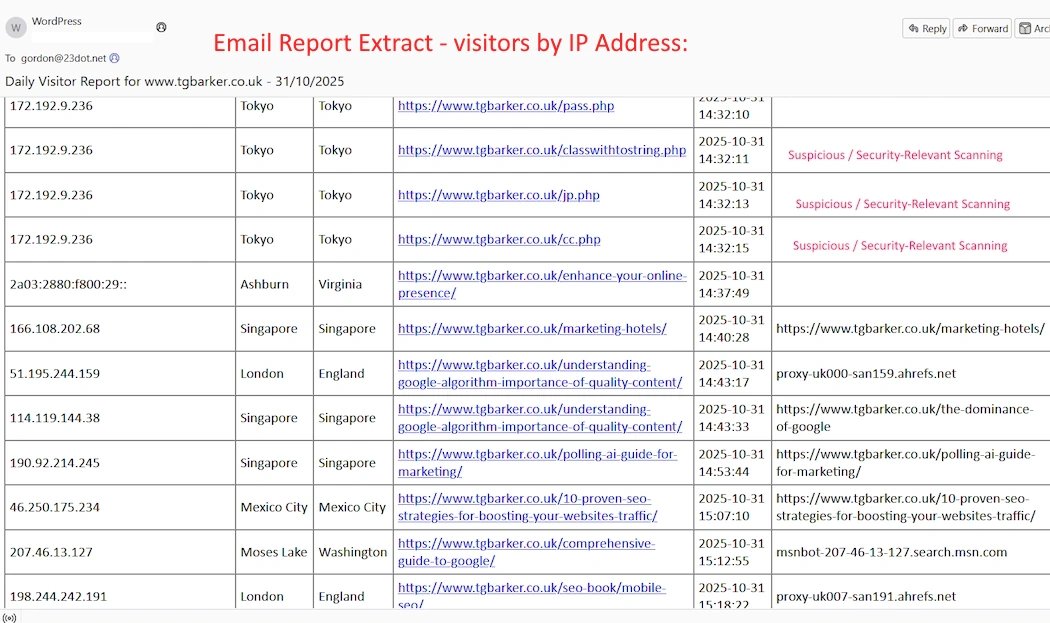

Most website owners only check their analytics to see how many people visited their pages. At TGBarker, we take that one step further: we look at who those visitors really are, so that we can make informed decisions. If you think you have been hacked, the first question is which pages are affected, or whether it is the whole website.

Most website analytics programs used in business give the owner data to back up decisions. They typically report how many visitors you have had, along with many other facts. Web analytics tools are software designed to track, measure, and report on website activity, such as site traffic, visitor source, and user clicks. We go further: we also monitor bad actors and stop them from visiting your site.

It started as part of a standard SEO workflow: collecting crawl data to understand which pages Google, Bing, and PetalBot were indexing. But what we discovered in those logs went far beyond SEO — hidden inside the crawl data were the fingerprints of automated scanners, brute-force attempts, and reconnaissance bots quietly testing our website’s defenses.

That’s where the idea for the TGBarker Security Layer (TGBSL) was born: to keep out hackers and suspicious visitors, whether they come from Russia, China, or elsewhere.

The Next Step in Website Intelligence

We’ve developed a system that combines data gathering and security reporting into a single process. It doesn’t just count visits; it monitors patterns — spotting unusual traffic, identifying legitimate search engine bots, and flagging potential threats before they become problems.
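One way to separate a legitimate search engine bot from an impostor is the reverse-then-forward DNS check that Google and Bing document for their crawlers. The sketch below is illustrative only and is not TGBSL's actual code: `classify_visitor`, the label strings, and the `CRAWLER_DOMAINS` table are assumptions made for this example, and the hostname suffixes should be checked against each engine's current documentation.

```python
import socket

# Hostname suffixes the engines publish for their crawlers.
# Assumption: confirm against each engine's current documentation.
CRAWLER_DOMAINS = {
    "googlebot": (".googlebot.com", ".google.com"),
    "bingbot": (".search.msn.com",),
}

def _reverse_dns(ip):
    # PTR lookup: IP address -> hostname
    return socket.gethostbyaddr(ip)[0]

def _forward_dns(host):
    # A lookup: hostname -> list of IPv4 addresses
    return socket.gethostbyname_ex(host)[2]

def classify_visitor(ip, user_agent,
                     reverse_dns=_reverse_dns, forward_dns=_forward_dns):
    """Label a visitor as a verified bot, a spoofed bot, or unclassified."""
    ua = user_agent.lower()
    for token, suffixes in CRAWLER_DOMAINS.items():
        if token not in ua:
            continue
        try:
            host = reverse_dns(ip).lower()
            # The hostname must belong to the engine AND resolve back to the IP.
            if host.endswith(suffixes) and ip in forward_dns(host):
                return "verified-" + token
        except OSError:
            pass  # failed lookup: treat the claim as unverified
        return "spoofed-" + token
    return "unclassified"
```

The resolver functions are passed in as parameters so the check can be exercised without network access. This version handles IPv4 only, and a production system would likely cache lookups rather than resolve on every request.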

Our system logs every connection, examines user-agent strings, cross-checks IP addresses, and builds a profile for each visitor. By doing so, it creates a living picture of who’s accessing the website: not just the predictable rhythm of Googlebot, but also where the chaotic bursts of requests from unknown IPs in Tokyo or Moscow originate, and so where a hacking attempt might be coming from.
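The profiling described above can be sketched roughly as follows. This is a minimal illustration, not the TGBSL implementation: it assumes the server writes the common "combined" access log format, and the names `VisitorProfile`, `build_profiles`, and `is_bursty`, along with the burst thresholds, are hypothetical choices for this example.

```python
import re
from collections import defaultdict
from dataclasses import dataclass, field
from datetime import datetime, timedelta

# Assumes the "combined" access log format used by Apache and nginx.
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+)[^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)

@dataclass
class VisitorProfile:
    hits: list = field(default_factory=list)    # request timestamps
    agents: set = field(default_factory=set)    # user-agent strings seen
    paths: set = field(default_factory=set)     # URLs requested

def build_profiles(lines):
    """Group parsed log lines into one profile per client IP."""
    profiles = defaultdict(VisitorProfile)
    for line in lines:
        m = LOG_PATTERN.match(line)
        if not m:
            continue  # skip lines that do not match the expected format
        profile = profiles[m["ip"]]
        profile.hits.append(datetime.strptime(m["time"], "%d/%b/%Y:%H:%M:%S %z"))
        profile.agents.add(m["agent"])
        profile.paths.add(m["path"])
    return profiles

def is_bursty(profile, max_hits=20, window=timedelta(seconds=10)):
    """True if more than max_hits requests fall inside any sliding window."""
    hits = sorted(profile.hits)
    start = 0
    for end, stamp in enumerate(hits):
        while stamp - hits[start] > window:
            start += 1
        if end - start + 1 > max_hits:
            return True
    return False
```

A steady crawler that spaces out its requests stays under the threshold, while a scanner firing dozens of requests in a few seconds trips it. The right values for `max_hits` and `window` depend on the site's normal traffic and would need tuning.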

This dual-layer approach isn’t just about collecting SEO insights; it’s about early threat detection.

In the worst case, when an attack is already on its way, it can help prevent a coordinated DDoS attempt or mass exploit scan from succeeding.

At best, it gives us valuable intelligence about how search engines and crawlers are discovering our content — allowing us to fine-tune both our SEO and our server security in the same motion.

When SEO Meets Cybersecurity

Traditional analytics tools like Google Search Console tell you what got crawled; our approach reveals who’s crawling and why.

By blending SEO data with security intelligence, we’ve created a feedback loop where every crawl log serves two purposes:

- Enhancing visibility in search results, and
- Protecting the integrity of the site.
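To make the two purposes concrete, here is a small sketch of how one pass over a crawl log could feed both an SEO report and a security report. Everything in it is an assumption made for illustration: the visitor labels (`verified-…`, `spoofed-…`, `unclassified`), the `split_crawl_log` function, and the short `PROBE_PATHS` list are hypothetical and do not describe TGBSL's actual rules.

```python
from collections import defaultdict

# Illustrative examples of commonly probed paths; an assumption,
# not TGBSL's real rule set.
PROBE_PATHS = ("/wp-login.php", "/xmlrpc.php", "/.env", "/.git/")

def split_crawl_log(entries):
    """Split (visitor_label, path, status) tuples into two reports."""
    seo = defaultdict(set)   # verified bot -> pages it crawled
    security = []            # requests that deserve a closer look
    for label, path, status in entries:
        if label.startswith("verified-"):
            seo[label].add(path)
        elif label.startswith("spoofed-") or path.startswith(PROBE_PATHS):
            security.append((label, path, status))
    return dict(seo), security

seo_report, security_report = split_crawl_log([
    ("verified-googlebot", "/blog/seo-tips", 200),
    ("unclassified", "/.env", 404),
    ("spoofed-googlebot", "/", 200),
])
```

In this example the SEO report shows which pages the verified crawler reached, while the security report collects the request for `/.env` and the visitor that falsely claimed to be Googlebot.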

The result is a proactive website monitoring system that learns continuously. It treats every bot as a potential signal — either of opportunity or of risk — and it responds accordingly.